What and How Many Animals are Admitted?

For consistency and plausibility of the analysis, we focus on cats and dogs only.

How Animals get Admitted

How Animals Leave Shelter

Unfortunately, for both cats and dogs the most common way to leave the shelter is being euthanized. But that is where the similarity between them ends:

- cats are returned to their owners far less often than dogs;

- dogs' adoption and euthanasia rates are almost the same, while cats are adopted far less often.
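
The outcome rates compared above are each outcome's share of all shelter stays for a species. Below is a minimal Python sketch (the post's own code is in R). It assumes each record has already been reduced to a hypothetical `(animal_type, outcome_type)` pair. Label strings such as `"CAT"` and `"EUTHANIZED"` are illustrative and may not match the dataset's exact values:

```python
from collections import Counter, defaultdict

# Hypothetical record layout: (animal_type, outcome_type) tuples.
# Field values in the real Dallas Animal Services export may differ.
def outcome_shares(records, species=("CAT", "DOG")):
    """Return {animal_type: {outcome_type: share_of_stays}} for the given species."""
    counts = defaultdict(Counter)
    for animal, outcome in records:
        if animal in species:  # keep cats and dogs only, as in the post
            counts[animal][outcome] += 1
    shares = {}
    for animal, c in counts.items():
        total = sum(c.values())
        shares[animal] = {outcome: n / total for outcome, n in c.items()}
    return shares
```

Comparing `shares["CAT"]` with `shares["DOG"]` outcome by outcome gives the kind of contrast described in the list above.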

From Admissions to Outcomes with Sankey

We begin analyzing this relationship with a higher-level but visually appealing visualization called a Sankey diagram (or just Sankey). It is a specific type of flow diagram in which the width of each arrow is proportional to the flow quantity. In our case each shelter stay contributes to the size of the pipe flowing from left (its intake type) to right (its outcome). The share of each intake type's pipe that ends in a given outcome is, in effect, the conditional probability of an animal leaving the shelter with that outcome given its intake type (the first image illustrates transitions for cats and the second does the same for dogs):

While the Owner Surrender intake type flows similarly for both species, Stray animals do not: stray cat outcomes are dominated by Euthanized, while stray dog outcomes are dominated by Adoption, with Transfer and Returned to Owner together matching Euthanized.

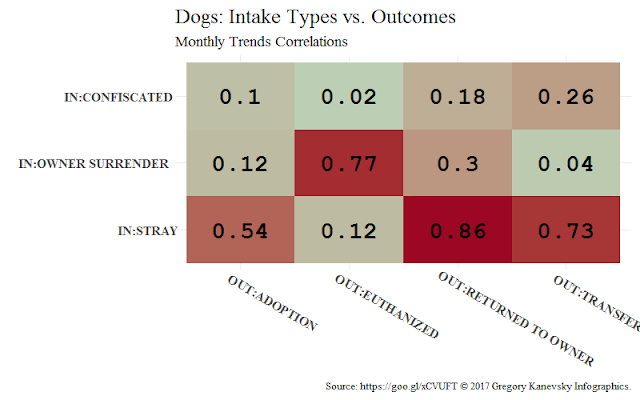
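
The conditional probabilities a Sankey diagram conveys can also be computed directly. Count one unit of flow per stay for each (intake, outcome) pair, then divide each count by its intake type's total. The sketch below is minimal and written in Python rather than the post's R. The `(intake_type, outcome_type)` tuples and the helper names `sankey_links` and `conditional_probs` are hypothetical:

```python
from collections import Counter, defaultdict

def sankey_links(stays):
    """Count (intake, outcome) transitions: one unit of flow per shelter stay."""
    return Counter((intake, outcome) for intake, outcome in stays)

def conditional_probs(links):
    """Normalize flows within each intake type, giving P(outcome | intake)."""
    totals = Counter()
    for (intake, _outcome), n in links.items():
        totals[intake] += n
    probs = defaultdict(dict)
    for (intake, outcome), n in links.items():
        probs[intake][outcome] = n / totals[intake]
    return dict(probs)
```

The pair counts give the link widths, since Sankey plotting tools typically take flows as source, target, and value triples. `conditional_probs` gives the share of each intake pipe that ends in each outcome.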

Correlations Between Admissions and Outcomes

In this case a strong correlation implies (at least to some extent) a causal effect, because the temporality, consistency, and plausibility criteria are present (see here and here). A few observations to note:

- The highest correlation for cats (0.91) and the second highest for dogs (0.77) are observed between the intake Surrendered by Owner and the outcome Euthanized, which is almost as obvious as it is unfortunate.

- For dogs, the correlation between Stray and Returned to Owner is the highest, at 0.86. This is great news because it means that the more dogs get lost, the more of them are found. The higher this correlation, the healthier the city, for two reasons: a) lost animals return home, and b) a larger share of stray dogs are lost rather than abandoned (given that the city keeps collecting them).

- Unfortunately, the Stray cat trend correlates highly with Euthanized. So while the Stray dog trend drives adoptions and returns, the Stray cat trend affects euthanasia the most (we saw this in the Sankey diagram as well).

- No outcome trends are affected by variation in Confiscated dogs, but this is likely due to the small share of such admissions.

- Variation in the number of Stray dogs admitted affects every outcome except Euthanized. Indeed, Stray is the largest intake type, almost twice as big as the second largest dog intake type, Owner Surrender.

- The low correlation between Stray and Euthanized for dogs needs additional analysis because it is counterintuitive.
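
Below is a sketch of how such an intake-by-outcome correlation matrix could be computed. The post does not state the method, so this assumes each coefficient is a Pearson correlation between two monthly count series that cover the same months in the same order. Function names are hypothetical, and the code is Python rather than the post's R:

```python
import math

def pearson(x, y):
    """Pearson correlation of two aligned, equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two aligned series of length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return math.nan  # a flat series has no defined correlation
    return sxy / math.sqrt(sxx * syy)

def intake_outcome_corr(intake_series, outcome_series):
    """Rows = intake types, columns = outcomes (e.g. a 3 x 4 matrix).

    Both arguments map a category name to its list of monthly counts.
    """
    return {
        intake: {outcome: pearson(xs, ys) for outcome, ys in outcome_series.items()}
        for intake, xs in intake_series.items()
    }
```

With three intake types and four outcomes, this yields the 3 x 4 layout of the correlation plots.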

Monthly Trends

Note that each plot is a 3 x 4 matrix, the same dimensions as the correlation matrices before. But instead of a correlation coefficient, each cell contains a pair of monthly trends (in fact, each correlation was computed from exactly these pairs of trends, which shows where each coefficient comes from). Each row corresponds to an intake type (the same blue line in every cell of the row) and each column to an outcome (the same red line in every cell of the column). Now that we can see the trends over time, let's record a few observations (following the matrix order, top down):

- The Confiscated intake trend is flat for both cats and dogs, with the only significant spike for dogs in January 2016. This spike is so unusual, relatively big, and contained within a single month or two that it calls for additional investigation into a probable external event or procedural change that may have caused it.

- The number of Confiscated animals is too low to noticeably affect outcomes. Still, if we could reduce the effect of the other intake types, some relationships might emerge.

- The correlation between the Owner Surrender trend and the Euthanized outcome is so obvious that this type of visualization is enough to spot it. Yes, it is unfortunate, but people bring in their old or unhealthy pets for a reason.

- For cats only, the same applies to both Stray and Owner Surrender.

- Owner Surrender has a significant seasonal component, spiking in summer, possibly due to hot weather, the holiday season, or both. For cats only, the seasonal component is also strong in the Stray trend.

- The Euthanized trend moves together with Owner Surrender, which causes it to a large degree.

- The number of Stray dogs in Dallas is slowly trending upward, which is alarming.

- Adoption also trends upward, but not steeply enough to compensate for the inflow of dogs into shelters. A targeted campaign to encourage more pet adoptions in the city is needed.

- The upward trend in the Transfer outcome also compensates for the growth in stray dogs. It is not clear whether this is positive or negative, though, as there is no way to track what happens to dogs after transfer (or is there?).

- The Stray trend for dogs dipped in January 2016, exactly when the Confiscated trend spiked. It could be a coincidence or the two could be related; either way, it is something to consider when investigating further.

- For dogs, the Euthanized trend correlates strongly with Stray intake until the summer of 2016, when the two start to diverge in opposite directions; again, some policy or procedural change apparently caused this. Indeed, looking at the other outcomes, we notice that the Returned to Owner trend began its uptick at around the same time (after observing this, I found out about this and this: significant changes in Dallas Animal Services leadership and policies around the summer and fall of 2016).
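
The pair of monthly trends in each cell can be built by counting intakes by intake month and outcomes by outcome month, both on a common, zero-filled month index. The sketch below also averages each calendar month across years, giving a crude view of the seasonal component noted above. This averaging is a simple stand-in and not a formal decomposition such as STL. The sketch assumes hypothetical `(category, datetime.date)` event pairs, and the helper names are illustrative:

```python
from collections import Counter
from datetime import date

def month_range(start, end):
    """All 'YYYY-MM' labels from start to end inclusive (datetime.date objects)."""
    y, m = start.year, start.month
    labels = []
    while (y, m) <= (end.year, end.month):
        labels.append(f"{y:04d}-{m:02d}")
        y, m = (y + 1, 1) if m == 12 else (y, m + 1)
    return labels

def monthly_counts(events, months):
    """Count events per category and month, zero-filled over `months`.

    events: iterable of (category, datetime.date) pairs, e.g. intake type
    with intake date, or outcome type with outcome date.
    """
    c = Counter((cat, d.strftime("%Y-%m")) for cat, d in events)
    cats = sorted({cat for cat, _ in c})
    # Counter returns 0 for missing keys, so empty months become 0, not gaps.
    return {cat: [c[(cat, m)] for m in months] for cat in cats}

def seasonal_profile(series, months):
    """Average count per calendar month (1-12) across all years in the series."""
    by_month = {}
    for label, value in zip(months, series):
        by_month.setdefault(int(label[5:]), []).append(value)
    return {mo: sum(vals) / len(vals) for mo, vals in sorted(by_month.items())}
```

Zero-filling matters. A month with no Confiscated intakes must appear as 0 rather than be dropped; otherwise the intake and outcome series misalign and any correlation computed from them is wrong.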

I will be back with more analysis (survival analysis). The R code for the data processing, analysis, and visualizations in this post can be found here.